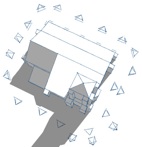

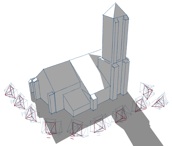

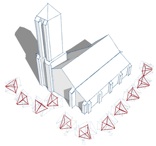

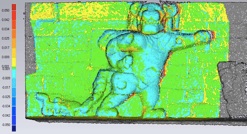

The pipeline consists of automated tie point extraction, bundle adjustment to derive the camera parameters, dense image matching for surface reconstruction, and orthoimage generation. The individual steps of the 3D reconstruction pipeline have been investigated in several studies, which report accurate metric results for automated markerless image orientation and dense image matching.

Acquisition and camera calibration protocol

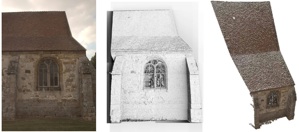

The pipeline focuses primarily on terrestrial applications, that is, on the acquisition and processing of terrestrial convergent images of architectural scenes and heritage artifacts. The digital camera should preferably be calibrated in advance, following basic photogrammetric rules, in order to obtain precise and reliable interior parameters. The acquisition protocols are reported in the section «Protocols».
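To illustrate what the interior parameters describe, the following minimal sketch projects a 3D point, given in the camera frame, onto the image using a principal distance, a principal point and two radial distortion coefficients. It is not the calibration procedure of the pipeline. The function name `project_point`, the parameter names `c`, `x0`, `y0`, `k1`, `k2`, the choice of a two-term radial polynomial and the convention of a camera looking along the positive Z axis are all assumptions made for illustration; a specific calibration tool may use a different model or sign convention.

```python
import numpy as np


def project_point(point_cam, c, x0, y0, k1=0.0, k2=0.0):
    """Project a 3D point (camera frame) to image coordinates.

    Hypothetical helper for illustration only.
    c      : principal distance (focal length), in pixels
    x0, y0 : principal point, in pixels
    k1, k2 : radial distortion coefficients, applied to normalised coordinates
    Assumes the camera looks along +Z (an assumed convention).
    """
    X, Y, Z = point_cam
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")

    # Normalised (ideal, distortion-free) image coordinates.
    x, y = X / Z, Y / Z

    # Radial distortion factor: 1 + k1*r^2 + k2*r^4.
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2

    # Scale by the principal distance and shift to the principal point.
    return np.array([x0 + c * x * radial, y0 + c * y * radial])


# Example: a point 10 units in front of the camera, 1 unit to the right.
uv_ideal = project_point((1.0, 0.0, 10.0), c=1000.0, x0=320.0, y0=240.0)
uv_distorted = project_point((1.0, 0.0, 10.0), c=1000.0, x0=320.0, y0=240.0, k1=0.1)
```

With zero distortion coefficients the function reduces to the ideal pinhole projection; a pre-calibrated camera supplies fixed values for these parameters, so that the subsequent orientation step does not have to estimate them from the scene images alone.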